The Real AI Question: How Do We Build Systems That Require Less Code?

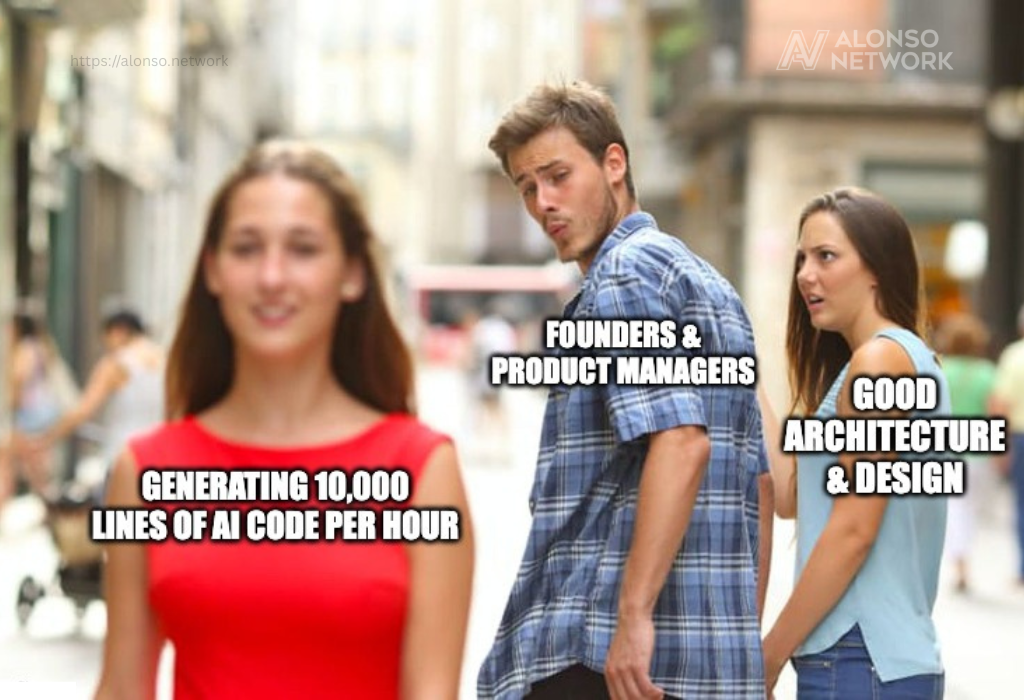

In the race to embrace AI, we're asking the wrong question.

It seems like everyone is obsessing over code generation speed. "How fast can we build?" "How many lines per hour can a model write?" It's the AI equivalent of measuring a lawyer by pages written, not cases won. The truth is, faster code output is only meaningful if the code itself is necessary, correct, and maintainable.

But what if the better question is: How do we build systems that require less code in the first place?

AI Is a Tool, Not a Shortcut

AI can help. It's a tool—one that can assist, augment, even accelerate aspects of development. But it doesn’t replace the fundamentals. Good architecture, clear thinking, system design, and business understanding—these MUST come first. They always have.

When companies allow AI to autopilot mission-critical systems without sufficient guardrails, they gamble with real-world consequences.

And devs who have worked on large teams know well how it goes:

- Massive PRs

- You get through the first quarter, and 15 minutes have passed

- You look at the pipeline, and all tests have passed

- "Approved and merged"

"But my team is very disciplined, Mr. Alonso."

Yes, I'm sure. But things slip through the cracks even with good team leads when they're reviewing five other PRs and attending design meetings.

Let's consider the following real and hypothetical scenarios:

Microsoft:

Microsoft's own AI tools provide sobering examples of what happens when AI becomes a shortcut rather than a carefully managed tool.

GitHub Copilot, Microsoft's flagship coding assistant, demonstrates the real-world consequences of treating AI as a magic solution. Research shows that 40% of Copilot-generated code contains security vulnerabilities. More troubling, repositories using Copilot show a roughly 40% higher rate of leaked secrets (API keys, passwords, credentials) than those without it: 6.4% versus 4.6%, which is a relative increase rather than a 40-point gap.
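As a quick check of the arithmetic behind the leaked-secrets comparison, here is a minimal sketch that derives both the absolute and the relative difference from the two quoted rates. The variable names are illustrative only, and the inputs are simply the 6.4% and 4.6% figures cited above.

```python
# Leak rates quoted in the text, expressed as percentages of repositories.
with_copilot = 6.4
without_copilot = 4.6

# Absolute gap, in percentage points.
absolute_gap = with_copilot - without_copilot

# Relative increase: how much higher the Copilot rate is than the baseline.
relative_increase = absolute_gap / without_copilot

print(f"Absolute gap: {absolute_gap:.1f} percentage points")
print(f"Relative increase: {relative_increase:.0%}")
```

The relative figure comes out at about 39%, which is where the "40% higher" headline comes from; the absolute gap is 1.8 percentage points.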

This isn't just a theoretical risk. In 2024, over 39 million secrets were leaked across GitHub, with Microsoft's own analysis showing that Copilot can inadvertently reproduce and amplify existing security vulnerabilities in codebases. When developers use Copilot as a shortcut—accepting suggestions without rigorous review—they're not just writing faster; they're spreading security debt at unprecedented scale.

What if Microsoft were using AI to build internal system code in the same way?

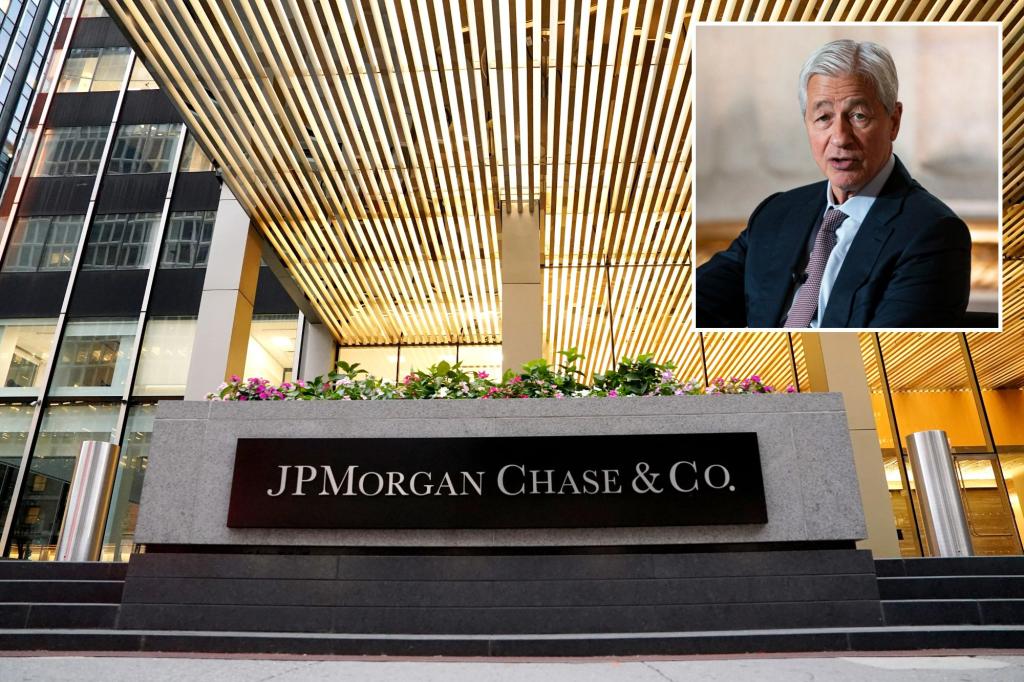

JPMorgan:

JPMorgan is experimenting with AI to help modernize legacy systems. This might include translating COBOL code into modern languages like Java. But it would be immensely irresponsible of them to hand the reins to AI without oversight. These systems aren’t simple scripts; they’re the backbone of global finance, encoding decades of institutional logic and risk controls.

Is it technically possible? It might be.

But it doesn't come without risks.
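To make one such risk concrete, here is a purely hypothetical sketch, not anything JPMorgan is known to do. It assumes a legacy routine that computes interest in fixed-point decimal and truncates to cents (COBOL arithmetic truncates when the `ROUNDED` phrase is omitted), and a naive translation that uses binary floats and rounds instead. The functions `legacy_interest`, `translated_interest`, and `find_mismatches`, and the sample inputs, are all invented for illustration. The point is the oversight step: run both versions on the same inputs and surface every disagreement for a human to review.

```python
from decimal import Decimal, ROUND_DOWN

CENT = Decimal("0.01")

def legacy_interest(balance: str, rate: str) -> Decimal:
    """Hypothetical stand-in for legacy behavior: exact decimal math, truncated to cents."""
    return (Decimal(balance) * Decimal(rate)).quantize(CENT, rounding=ROUND_DOWN)

def translated_interest(balance: str, rate: str) -> Decimal:
    """Hypothetical stand-in for a naive translation: binary floats, rounded to cents."""
    return Decimal(str(round(float(balance) * float(rate), 2)))

def find_mismatches(cases):
    """Run both implementations on identical inputs and collect every disagreement."""
    mismatches = []
    for balance, rate in cases:
        old = legacy_interest(balance, rate)
        new = translated_interest(balance, rate)
        if old != new:
            mismatches.append((balance, rate, old, new))
    return mismatches

# Invented sample inputs: (balance, rate) pairs as decimal strings.
cases = [("1000.00", "0.05"), ("1234.56", "0.0375"), ("999.99", "0.0125")]
mismatches = find_mismatches(cases)
for balance, rate, old, new in mismatches:
    print(f"balance={balance} rate={rate}: legacy={old} translated={new}")
```

Under these assumptions, two of the three cases disagree by a cent. Both versions would pass a casual review, and neither is "wrong" in isolation; only a side-by-side comparison against the legacy behavior reveals that the translation quietly changed a business rule.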

This is not to single out these companies—it's to highlight how normal this kind of behavior has become. The culture is shifting toward automating what we don't even fully understand ourselves. This can be dangerous.

The Real Metric: Systems Delivered, Not Code Written

What should we be measuring instead? Not "tokens per second" or "PRs merged per hour." Not even just "features shipped." We should be looking at secure, maintainable, and correct systems delivered per quarter. Systems that real people can trust. Systems that don't collapse when the team that built them leaves. Systems that don't require three engineers and an ops war room to debug every third week.

AI can help maintain these systems. It can assist with code summaries, upgrades, generation of simple tests, log analysis, scaffolding, refactoring, and more. This alone speeds up development, helps ship code faster, and helps onboard new team members more quickly. But it cannot, and should not, be expected to design, justify, or validate the very systems we rely on. That is what developers, QA, and the business MUST do.

Less Code Is the Goal

The real power lies in reducing complexity, not generating it faster. Smaller, cleaner, well-thought-out systems are more valuable than large AI-generated ones. Systems that are simple, observable, and correct at their core require less maintenance, less documentation, and less heroism to operate. Code churn gets replaced with thinking and system design, which in the long term is still faster than undoing an AI-generated nightmare.

We should be asking:

- What decisions can eliminate an entire layer of code?

- What abstractions are truly necessary?

- How do we design for correctness from the start?

These are questions that require human experience, domain insight, and rigorous thinking—not a prompt.